Implementing the handoffs-between-agents pattern with LangGraph (2)

Using with a custom agent

from typing_extensions import Literal

from langchain_core.messages import ToolMessage
from langchain_core.tools import tool
from langgraph.graph import MessagesState, StateGraph, START
from langgraph.types import Command


def make_agent(model, tools, system_prompt=None):
    model_with_tools = model.bind_tools(tools)
    tools_by_name = {tool.name: tool for tool in tools}

    def call_model(state: MessagesState) -> Command[Literal["call_tools", "__end__"]]:
        messages = state["messages"]
        if system_prompt:
            messages = [{"role": "system", "content": system_prompt}] + messages

        response = model_with_tools.invoke(messages)
        if len(response.tool_calls) > 0:
            return Command(goto="call_tools", update={"messages": [response]})

        return {"messages": [response]}

    # NOTE: this is a simplified version of the prebuilt ToolNode
    # If you want to have a tool node that has full feature parity, please refer to the source code
    def call_tools(state: MessagesState) -> Command[Literal["call_model"]]:
        tool_calls = state["messages"][-1].tool_calls
        results = []
        for tool_call in tool_calls:
            tool_ = tools_by_name[tool_call["name"]]
            tool_input_fields = tool_.get_input_schema().model_json_schema()[
                "properties"
            ]

            # this is simplified for demonstration purposes and
            # is different from the ToolNode implementation
            if "state" in tool_input_fields:
                # inject state
                tool_call = {**tool_call, "args": {**tool_call["args"], "state": state}}

            tool_response = tool_.invoke(tool_call)
            if isinstance(tool_response, ToolMessage):
                results.append(Command(update={"messages": [tool_response]}))

            # handle tools that return Command directly
            elif isinstance(tool_response, Command):
                results.append(tool_response)

        # NOTE: nodes in LangGraph allow you to return list of updates, including Command objects
        return results

    graph = StateGraph(MessagesState)
    graph.add_node(call_model)
    graph.add_node(call_tools)
    graph.add_edge(START, "call_model")
    graph.add_edge("call_tools", "call_model")

    return graph.compile()
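The `call_tools` node decides whether to inject the graph state by checking whether the tool's input schema declares a `state` field. The same idea can be sketched in plain Python with `inspect.signature`. This is a stand-alone illustration rather than LangGraph code, and `run_tool`, `echo`, and `count_messages` are hypothetical names.

```python
import inspect


def run_tool(func, args, state):
    """Call func with args, injecting state only if func declares a `state` parameter."""
    params = inspect.signature(func).parameters
    if "state" in params:
        # Build a new dict so the caller's args are not mutated, then inject state.
        args = {**args, "state": state}
    return func(**args)


def echo(text):
    """A tool that does not need the state."""
    return text


def count_messages(prefix, state):
    """A tool that reads the injected state."""
    return f"{prefix}{len(state['messages'])}"


state = {"messages": ["hi", "there"]}
print(run_tool(echo, {"text": "ok"}, state))
print(run_tool(count_messages, {"prefix": "n="}, state))
```

The caller never passes `state` explicitly, which mirrors how the model never sees or fills in the injected argument.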

Define tools

@tool
def add(a: int, b: int) -> int:
    """Adds two numbers."""
    return a + b


@tool
def multiply(a: int, b: int) -> int:
    """Multiplies two numbers."""
    return a * b

Create an agent

from langchain_openai import ChatOpenAI

model = ChatOpenAI(
    temperature=0,
    model="GLM-4-PLUS",
    openai_api_key="your api key",
    openai_api_base="https://open.bigmodel.cn/api/paas/v4/"
)

agent = make_agent(model, [add, multiply])

from langchain_core.messages import convert_to_messages


def pretty_print_messages(update):
    if isinstance(update, tuple):
        ns, update = update
        # skip parent graph updates in the printouts
        if len(ns) == 0:
            return

        graph_id = ns[-1].split(":")[0]
        print(f"Update from subgraph {graph_id}:")
        print("\n")

    for node_name, node_update in update.items():
        print(f"Update from node {node_name}:")
        print("\n")

        for m in convert_to_messages(node_update["messages"]):
            m.pretty_print()
        print("\n")


for chunk in agent.stream({"messages": [("user", "what's (3 + 5) * 12")]}):
    pretty_print_messages(chunk)

Update from node call_model:

================================== Ai Message ==================================
Tool Calls:
  add (call_-9024510033517604232)
 Call ID: call_-9024510033517604232
  Args:
    a: 3
    b: 5

Update from node call_tools:

================================= Tool Message =================================
Name: add

8

Update from node call_model:

================================== Ai Message ==================================
Tool Calls:
  multiply (call_-9024516080833702606)
 Call ID: call_-9024516080833702606
  Args:
    a: 8
    b: 12

Update from node call_tools:

================================= Tool Message =================================
Name: multiply

96

Update from node call_model:

================================== Ai Message ==================================

The result of (3 + 5) * 12 is 96.
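The trace alternates between `call_model` and `call_tools` until the model stops requesting tools. A minimal plain-Python sketch of that loop follows, with a scripted stand-in for the model. No LLM or LangGraph is involved, and `scripted_model`, `run_agent`, and the dict-shaped messages are assumptions made for illustration.

```python
def add(a, b):
    return a + b


def multiply(a, b):
    return a * b


TOOLS = {"add": add, "multiply": multiply}


def scripted_model(messages):
    """Stand-in for an LLM: pick the next step from the tool results seen so far."""
    tool_results = [m["content"] for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"role": "ai", "tool_calls": [{"name": "add", "args": {"a": 3, "b": 5}}]}
    if len(tool_results) == 1:
        args = {"a": tool_results[0], "b": 12}
        return {"role": "ai", "tool_calls": [{"name": "multiply", "args": args}]}
    return {"role": "ai", "content": f"The result is {tool_results[-1]}.", "tool_calls": []}


def run_agent(question):
    messages = [{"role": "user", "content": question}]
    while True:
        response = scripted_model(messages)  # the "call_model" step
        messages.append(response)
        if not response["tool_calls"]:  # no tool calls: the loop ends
            return messages
        for call in response["tool_calls"]:  # the "call_tools" step
            result = TOOLS[call["name"]](**call["args"])
            messages.append({"role": "tool", "name": call["name"], "content": result})


history = run_agent("what's (3 + 5) * 12")
print(history[-1]["content"])
```

The resulting history has the same shape as the trace: a user message, then two rounds of AI tool call plus tool result, then the final AI answer.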

Define handoff tools

from typing import Annotated

from langchain_core.tools import tool
from langchain_core.tools.base import InjectedToolCallId
from langgraph.prebuilt import InjectedState


def make_handoff_tool(*, agent_name: str):
    """Create a tool that can return handoff via a Command"""
    tool_name = f"transfer_to_{agent_name}"

    @tool(tool_name)
    def handoff_to_agent(
        # optionally pass current graph state to the tool (will be ignored by the LLM)
        state: Annotated[dict, InjectedState],
        # optionally pass the current tool call ID (will be ignored by the LLM)
        tool_call_id: Annotated[str, InjectedToolCallId],
    ):
        """Ask another agent for help."""
        tool_message = {
            "role": "tool",
            "content": f"Successfully transferred to {agent_name}",
            "name": tool_name,
            "tool_call_id": tool_call_id,
        }
        return Command(
            # navigate to another agent node in the PARENT graph
            goto=agent_name,
            graph=Command.PARENT,
            # This is the state update that the agent `agent_name` will see when it is invoked.
            # We're passing agent's FULL internal message history AND adding a tool message to make sure
            # the resulting chat history is valid (each tool call needs a matching tool message).
            update={"messages": state["messages"] + [tool_message]},
        )

    return handoff_to_agent
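The handoff tool appends a tool message because chat model APIs generally expect every tool call in an AI message to be answered by a tool message carrying the same `tool_call_id`. The check below is a hedged, plain-Python sketch of that rule using dict-shaped messages. `unanswered_tool_calls` is a hypothetical helper, not part of LangChain, and the `call_1` ID is made up.

```python
def unanswered_tool_calls(messages):
    """Return the IDs of tool calls that have no tool message answering them."""
    requested = []
    answered = set()
    for message in messages:
        if message["role"] == "ai":
            requested.extend(call["id"] for call in message.get("tool_calls", []))
        elif message["role"] == "tool":
            answered.add(message["tool_call_id"])
    return [call_id for call_id in requested if call_id not in answered]


history = [
    {"role": "user", "content": "what's (3 + 5) * 12"},
    {
        "role": "ai",
        "content": "",
        "tool_calls": [
            {"id": "call_1", "name": "transfer_to_multiplication_expert", "args": {}}
        ],
    },
]
print(unanswered_tool_calls(history))

tool_message = {
    "role": "tool",
    "content": "Successfully transferred to multiplication_expert",
    "name": "transfer_to_multiplication_expert",
    "tool_call_id": "call_1",
}
print(unanswered_tool_calls(history + [tool_message]))
```

Without the appended tool message, the history handed to the next agent would end on a dangling tool call.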

Define agents and graph

addition_expert = make_agent(
    model,
    [add, make_handoff_tool(agent_name="multiplication_expert")],
    system_prompt="You are an addition expert, you can ask the multiplication expert for help with multiplication.",
)

multiplication_expert = make_agent(
    model,
    [multiply, make_handoff_tool(agent_name="addition_expert")],
    system_prompt="You are a multiplication expert, you can ask an addition expert for help with addition.",
)

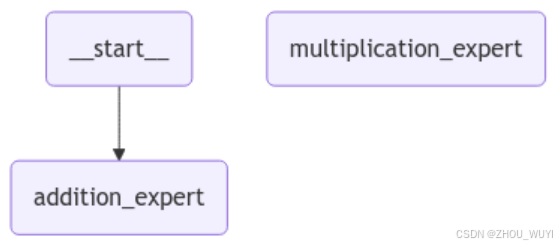

builder = StateGraph(MessagesState)
builder.add_node("addition_expert", addition_expert)
builder.add_node("multiplication_expert", multiplication_expert)
builder.add_edge(START, "addition_expert")
graph = builder.compile()
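The parent graph declares only one edge, from `START` to `addition_expert`. All other routing happens at runtime through the `Command(goto=..., graph=Command.PARENT)` objects returned by the handoff tools. A rough plain-Python sketch of that control flow is shown here: each agent returns either the name of the next agent or `None` to finish. `Handoff`, `run_parent`, and the two scripted agents are hypothetical stand-ins, not LangGraph APIs.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Handoff:
    """Simplified stand-in for a command: where to go next and what to append."""
    goto: Optional[str]
    update: list = field(default_factory=list)


def addition_expert(messages):
    # Scripted: compute the sum, then hand off the multiplication.
    return Handoff(goto="multiplication_expert", update=["3 + 5 = 8"])


def multiplication_expert(messages):
    # Scripted: finish the calculation; goto=None ends the run.
    return Handoff(goto=None, update=["8 * 12 = 96"])


AGENTS = {
    "addition_expert": addition_expert,
    "multiplication_expert": multiplication_expert,
}


def run_parent(start, messages, max_steps=10):
    """Run agents one at a time, following each handoff until an agent returns goto=None."""
    current = start
    visited = []
    for _ in range(max_steps):  # guard against agents handing off forever
        visited.append(current)
        result = AGENTS[current](messages)
        messages = messages + result.update
        if result.goto is None:
            break
        current = result.goto
    return visited, messages


visited, messages = run_parent("addition_expert", ["what's (3 + 5) * 12"])
print(visited)
print(messages[-1])
```

The `max_steps` guard is a design choice of this sketch: two agents that can each transfer to the other may otherwise bounce control back and forth indefinitely.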

Visualize the graph

from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))

Run the graph

for chunk in graph.stream(
    {"messages": [("user", "what's (3 + 5) * 12")]}, subgraphs=True
):
    pretty_print_messages(chunk)

Update from subgraph addition_expert:

Update from node call_model:

================================== Ai Message ==================================
Tool Calls:
  transfer_to_multiplication_expert (call_-9024520341442308872)
 Call ID: call_-9024520341442308872
  Args:

Update from subgraph multiplication_expert:

Update from node call_model:

================================== Ai Message ==================================
Tool Calls:
  transfer_to_addition_expert (call_-9024514672083873280)
 Call ID: call_-9024514672083873280
  Args:

Update from subgraph addition_expert:

Update from node call_model:

================================== Ai Message ==================================
Tool Calls:
  add (call_-9024512129462455947)
 Call ID: call_-9024512129462455947
  Args:
    a: 3
    b: 5

Update from subgraph addition_expert:

Update from node call_tools:

================================= Tool Message =================================
Name: add

8

Update from subgraph addition_expert:

Update from node call_model:

================================== Ai Message ==================================

The result of (3 + 5) * 12 is 96.
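The `Update from subgraph ...` labels in this output come from the namespace tuple that `graph.stream(..., subgraphs=True)` yields alongside each update. `pretty_print_messages` takes the last namespace entry and keeps the part before the first colon. Here is a small stand-alone sketch of that parsing. The namespace string is made up for illustration, and `subgraph_name` is a hypothetical helper.

```python
def subgraph_name(chunk):
    """Return the subgraph name for a (namespace, update) chunk, or None for parent updates."""
    if not isinstance(chunk, tuple):
        # Without subgraphs=True, chunks are plain update dicts with no namespace.
        return None
    namespace, _update = chunk
    if len(namespace) == 0:
        # An empty namespace means the update came from the parent graph itself.
        return None
    return namespace[-1].split(":")[0]


chunk = (("addition_expert:0b1c2d3e",), {"call_model": {"messages": []}})
print(subgraph_name(chunk))
print(subgraph_name(((), {"addition_expert": None})))
```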

Using prebuilt agents

from langgraph.prebuilt import create_react_agent

addition_expert = create_react_agent(
    model,
    [add, make_handoff_tool(agent_name="multiplication_expert")],
    prompt="You are an addition expert, you can ask the multiplication expert for help with multiplication.",
)

multiplication_expert = create_react_agent(
    model,
    [multiply, make_handoff_tool(agent_name="addition_expert")],
    prompt="You are a multiplication expert, you can ask an addition expert for help with addition.",
)

builder = StateGraph(MessagesState)
builder.add_node("addition_expert", addition_expert)
builder.add_node("multiplication_expert", multiplication_expert)
builder.add_edge(START, "addition_expert")
graph = builder.compile()

Run the graph

for chunk in graph.stream(
    {"messages": [("user", "what's (3 + 5) * 12")]}, subgraphs=True
):
    pretty_print_messages(chunk)

Update from subgraph addition_expert:

Update from node agent:

================================== Ai Message ==================================
Tool Calls:
  transfer_to_multiplication_expert (call_-9024512232541694769)
 Call ID: call_-9024512232541694769
  Args:

Update from subgraph multiplication_expert:

Update from node agent:

================================== Ai Message ==================================
Tool Calls:
  transfer_to_addition_expert (call_-9024517867540713141)
 Call ID: call_-9024517867540713141
  Args:

Update from subgraph addition_expert:

Update from node agent:

================================== Ai Message ==================================
Tool Calls:
  add (call_-9024509037084815237)
 Call ID: call_-9024509037084815237
  Args:
    a: 3
    b: 5

Update from subgraph addition_expert:

Update from node tools:

================================= Tool Message =================================
Name: add

8

Update from subgraph addition_expert:

Update from node agent:

================================== Ai Message ==================================

The result of (3 + 5) * 12 is 96.

Another example

from langgraph.prebuilt import create_react_agent

# NOTE: the handoff target must be the name of a node registered in the parent graph,
# so each agent hands off to the other agent's node name.
addition_expert = create_react_agent(
    model,
    [add, make_handoff_tool(agent_name="multiplication_expert")],
    prompt="You only speak in English, you can ask the Chinese expert for help with Chinese.",
)

# Prompt (in Chinese): "You only speak Chinese; when you get a question in English,
# ask another agent for help."
multiplication_expert = create_react_agent(
    model,
    [multiply, make_handoff_tool(agent_name="addition_expert")],
    prompt="你只讲中文,遇到英文问题,向别的agent请求帮助",
)

builder = StateGraph(MessagesState)
builder.add_node("addition_expert", addition_expert)
builder.add_node("multiplication_expert", multiplication_expert)
builder.add_edge(START, "addition_expert")
graph = builder.compile()

for chunk in graph.stream(
    {"messages": [("user", "what's deepseek")]}, subgraphs=True
):
    pretty_print_messages(chunk)

Update from subgraph addition_expert:

Update from node agent:

================================== Ai Message ==================================

"Deepseek" isn't a widely recognized term in popular culture or technology as of my last update in early 2023. It could be a misspelling, a specific term used in a niche field, or a new concept that has emerged after my last update. Here are a few possibilities:

1. **Typo or Misspelling**: It might be a misspelling of a more common term like "deepfake," which refers to sophisticated AI-generated audio and video content that can mimic real people, or "deep learning," a subset of machine learning involving neural networks.

2. **Niche Term**: It could be a specific term used in a particular industry or academic field. For example, it might relate to a specific algorithm, software, or methodology in data science, AI, or another technical field.

3. **New Concept or Product**: If "deepseek" is a new term that has emerged recently, it might refer to a new technology, product, or service that wasn't widely known before 2023.

4. **Brand or Company Name**: It could also be the name of a company, brand, or product that is not widely recognized yet.

To get a more accurate answer, additional context would be helpful. If you have more details or a specific context in which you encountered the term "deepseek," please provide them, and I can give a more targeted response. Alternatively, searching online or checking recent publications might yield more current information.

Reference: https://langchain-ai.github.io/langgraph/how-tos/agent-handoffs/#implement-a-handoff-tool
