Pretraining Object Detection Models: Self-Supervised Contrastive Learning

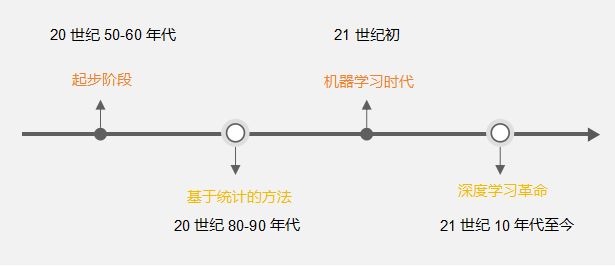

Traditional models

Efficient Visual Pretraining with Contrastive Detection

Paper: Efficient Visual Pretraining with Contrastive Detection (readpaper.com)

Point-Level Region Contrast for Object Detection Pre-Training

Paper: Point-Level Region Contrast for Object Detection Pre-Training (readpaper.com)

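Uses sampled points in place of the proposals used by earlier methods, building sample pairs from the points sampled in different regions so that the model learns more precise feature representations.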
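
The point-level idea can be sketched as below, as a minimal illustration rather than the paper's implementation. It assumes boxes given as (x0, y0, x1, y1), two views that share the same coordinates (no geometric augmentation), dense features exposed through hypothetical callables `feat_a` / `feat_b` that map a point to a vector, and an InfoNCE loss with an illustrative temperature `tau=0.2`. For each sampled point, another point from the same region is the positive and the points from all other regions are the negatives; all helper names are hypothetical.

```python
import math
import random

def sample_points(box, k, rng):
    """Uniformly sample k (x, y) points inside a box given as (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    return [(rng.uniform(x0, x1), rng.uniform(y0, y1)) for _ in range(k)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(anchor, positive, negatives, tau=0.2):
    """-log softmax of the positive among [positive] + negatives (cosine similarity / tau)."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(l - m) for l in logits)) - logits[0]

def point_region_loss(feat_a, feat_b, boxes, k=4, tau=0.2, seed=0):
    """Average point-level InfoNCE over all sampled points.

    feat_a / feat_b: hypothetical callables returning the dense feature of view A / B
    at an (x, y) point; both views share coordinates in this sketch (an assumption).
    Positive: another point sampled in the same region, read from view B.
    Negatives: every point sampled in the other regions, read from view B.
    """
    rng = random.Random(seed)
    points = [sample_points(box, k, rng) for box in boxes]
    losses = []
    for i, region in enumerate(points):
        negatives = [feat_b(q) for r, other in enumerate(points) if r != i for q in other]
        for j, p in enumerate(region):
            positive = feat_b(region[(j + 1) % k])
            losses.append(info_nce(feat_a(p), positive, negatives, tau))
    return sum(losses) / len(losses)
```

Sampling several points per region, instead of pooling one vector per proposal, lets the loss see structure inside each region; in a real pipeline `feat_a` / `feat_b` would read from backbone feature maps of two augmented views.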

Aligning Pretraining for Detection via Object-Level Contrastive Learning (SoCo)

Paper: Aligning Pretraining for Detection via Object-Level Contrastive Learning (readpaper.com)

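Builds three different views of the same proposal through random cropping and resizing, then applies a contrastive loss to constrain the distance between these three views in feature space.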
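
Below is a rough sketch of the view-construction step only, assuming proposal boxes in (x0, y0, x1, y1) pixel coordinates. Each view is a random crop that still contains the proposal, resized to a square, and the function returns where the proposal lands in each view. Making the third view the second crop at a lower resolution (112 vs. 224) is an assumption of this sketch; the embeddings pooled from these boxes and the loss that compares them are omitted. All names are hypothetical.

```python
import random

def crop_resize_box(box, crop, out_size):
    """Map a box from image coordinates into a crop window resized to out_size x out_size."""
    cx0, cy0, cx1, cy1 = crop
    sx = out_size / (cx1 - cx0)
    sy = out_size / (cy1 - cy0)
    x0, y0, x1, y1 = box
    return ((x0 - cx0) * sx, (y0 - cy0) * sy, (x1 - cx0) * sx, (y1 - cy0) * sy)

def random_crop_containing(box, img_w, img_h, rng):
    """Random crop window (cx0, cy0, cx1, cy1) that still contains the whole proposal."""
    x0, y0, x1, y1 = box
    return (rng.uniform(0, x0), rng.uniform(0, y0),
            rng.uniform(x1, img_w), rng.uniform(y1, img_h))

def three_views(box, img_w, img_h, size=224, small=112, seed=0):
    """Return the proposal's box in three views (the resolutions are assumptions)."""
    rng = random.Random(seed)
    crop1 = random_crop_containing(box, img_w, img_h, rng)
    crop2 = random_crop_containing(box, img_w, img_h, rng)
    v1 = crop_resize_box(box, crop1, size)
    v2 = crop_resize_box(box, crop2, size)
    v3 = crop_resize_box(box, crop2, small)  # same crop as v2, lower resolution
    return v1, v2, v3
```

Transforming the box together with the crop is what keeps the three views pointing at the same object, so the features pooled from them can be pulled together by the loss.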

DetCo: Unsupervised Contrastive Learning for Object Detection (DetCo)

Paper: DetCo: Unsupervised Contrastive Learning for Object Detection (readpaper.com)

Treats the proposal as a global patch and splits it into several local patches, then builds a local-local contrastive loss and a global-local contrastive loss, combining contrastive learning with object detection.
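
A minimal sketch of the two losses named above, under stated assumptions: the global patch is split into a 3 x 3 grid, the local-patch embeddings of a sample are aggregated by averaging (an assumption; other aggregations are possible), and both losses are batch InfoNCE terms computed across two augmented views A and B, with the other samples in the batch as negatives. Embeddings are plain lists standing in for network outputs; the function names are hypothetical.

```python
import math

def split_into_local_patches(box, grid=3):
    """Split a global patch (x0, y0, x1, y1) into grid x grid local patches, row-major."""
    x0, y0, x1, y1 = box
    w = (x1 - x0) / grid
    h = (y1 - y0) / grid
    return [(x0 + c * w, y0 + r * h, x0 + (c + 1) * w, y0 + (r + 1) * h)
            for r in range(grid) for c in range(grid)]

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def mean_vec(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def info_nce(queries, keys, tau=0.2):
    """Batch InfoNCE: queries[i] is paired with keys[i]; all other keys are negatives."""
    total = 0.0
    for i, q in enumerate(queries):
        q = normalize(q)
        logits = [sum(a * b for a, b in zip(q, normalize(k))) / tau for k in keys]
        m = max(logits)
        total += m + math.log(sum(math.exp(l - m) for l in logits)) - logits[i]
    return total / len(queries)

def detco_style_loss(global_a, local_a, global_b, local_b, tau=0.2):
    """global_*: [N][D] global-patch embeddings of views A and B.
    local_*: [N][9][D] local-patch embeddings, averaged per sample (an assumption)."""
    agg_a = [mean_vec(p) for p in local_a]
    agg_b = [mean_vec(p) for p in local_b]
    local_local = info_nce(agg_a, agg_b, tau)     # local patches of A vs. local patches of B
    global_local = info_nce(global_a, agg_b, tau)  # global patch of A vs. local patches of B
    return local_local + global_local
```

The global-local term asks the whole patch to agree with its parts seen in another view, which is the extra signal local patches add on top of a purely global contrast.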

Dense Contrastive Learning for Self-Supervised Visual Pre-Training

Paper: Dense Contrastive Learning for Self-Supervised Visual Pre-Training (readpaper.com)

DETR-based models

Siamese DETR

Paper: Siamese DETR (readpaper.com)

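Borrows the idea of the self-supervised contrastive learning method BYOL and proposes two pretext tasks, one for classification and one for localization, to pretrain the model. Proposals are generated offline with the EdgeBoxes algorithm.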
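
The BYOL ingredients referred to above can be sketched as follows: a regression loss between an online network's prediction and a target network's projection of another view, plus an exponential-moving-average update of the target weights. This is only the generic BYOL objective on plain vectors, not Siamese DETR's specific classification and localization pretext tasks; parameters are flattened into lists for illustration, and `tau=0.99` is an illustrative value.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def byol_loss(p, z):
    """Squared L2 distance between the L2-normalised online prediction p and the
    (stop-gradient) target projection z; equals 2 - 2 * cos(p, z)."""
    return sum((a - b) ** 2 for a, b in zip(normalize(p), normalize(z)))

def symmetric_byol_loss(p1, z2, p2, z1):
    """Each view's online prediction regresses the other view's target projection."""
    return byol_loss(p1, z2) + byol_loss(p2, z1)

def ema_update(target, online, tau=0.99):
    """Target weights track the online weights as an exponential moving average."""
    return [tau * t + (1.0 - tau) * o for t, o in zip(target, online)]
```

Because there are no negative pairs, BYOL relies on the asymmetric predictor and the slowly moving target, rather than on the loss itself, to avoid collapsed representations.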

DETReg: Unsupervised Pretraining with Region Priors for Object Detection

Paper: DETReg: Unsupervised Pretraining with Region Priors for Object Detection (readpaper.com)

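Performs pretraining through distillation: a SwAV-pretrained model acts as the teacher that supervises the classification task, so that DETR learns SwAV's feature embeddings. Proposals generated by the Selective Search algorithm serve as supervision for the localization task.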
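
The two supervision signals described above can be sketched as a loss on already-computed decoder outputs. This sketch assumes boxes as 4-tuples and matches predictions to the Selective Search pseudo-boxes with a brute-force search standing in for Hungarian matching (only viable for tiny inputs). It uses an L1 distance between each matched prediction's embedding and the frozen SwAV embedding of its pseudo-box. The exact matching costs, loss weights, and any objectness term used by DETReg are not reproduced here, and all names are hypothetical.

```python
import itertools

def l1(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))

def match(pred_boxes, pseudo_boxes):
    """Brute-force bipartite matching minimising total box L1 cost (a stand-in for the
    Hungarian algorithm). Needs len(pred_boxes) >= len(pseudo_boxes).
    Returns a list of (pred_index, pseudo_index) pairs."""
    best, best_cost = None, float("inf")
    for perm in itertools.permutations(range(len(pred_boxes)), len(pseudo_boxes)):
        cost = sum(l1(pred_boxes[p], pseudo_boxes[j]) for j, p in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return [(p, j) for j, p in enumerate(best)]

def detreg_style_loss(pred_boxes, pred_embs, pseudo_boxes, teacher_embs):
    """pseudo_boxes: unsupervised proposals (localization targets);
    teacher_embs: frozen SwAV embeddings of each proposal crop (distillation targets)."""
    pairs = match(pred_boxes, pseudo_boxes)
    box_loss = sum(l1(pred_boxes[p], pseudo_boxes[j]) for p, j in pairs) / len(pairs)
    emb_loss = sum(l1(pred_embs[p], teacher_embs[j]) for p, j in pairs) / len(pairs)
    return box_loss + emb_loss
```

Matching first and then applying both terms to the same pairs keeps the distilled embedding tied to the box that the prediction is localising.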

UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

Paper: UP-DETR: Unsupervised Pre-training for Object Detection with Transformers (readpaper.com)

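Proposes a new pretext task: a patch is randomly cropped from the original image and fed into the DETR decoder as a query, and the model is expected to locate this patch in the original image and classify it.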
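
The data side of this pretext task can be sketched as follows: crop a random patch and compute the box the decoder should predict for it, in DETR's normalised (cx, cy, w, h) format. The image is a plain H x W nested list, and the patch-size range (`min_frac`, `max_frac`) is an illustrative assumption. This sketch leaves out the classification head and how the patch feature is injected into the decoder queries.

```python
import random

def random_query_patch(img, min_frac=0.1, max_frac=0.5, rng=None):
    """Crop a random patch from img (an H x W nested list) and return it together
    with its box in normalised (cx, cy, w, h) form, i.e. the localisation target
    for the decoder when this patch is used as a query."""
    rng = rng or random.Random()
    h, w = len(img), len(img[0])
    ph = max(1, int(h * rng.uniform(min_frac, max_frac)))  # patch height in pixels
    pw = max(1, int(w * rng.uniform(min_frac, max_frac)))  # patch width in pixels
    y0 = rng.randint(0, h - ph)
    x0 = rng.randint(0, w - pw)
    patch = [row[x0:x0 + pw] for row in img[y0:y0 + ph]]
    target = ((x0 + pw / 2) / w, (y0 + ph / 2) / h, pw / w, ph / h)
    return patch, target
```

Since the target comes for free from the crop coordinates, this task needs no human labels: the image itself supplies both the query and its ground-truth box.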

Proposal-Contrastive Pretraining for Object Detection from Fewer Data

Paper: Proposal-Contrastive Pretraining for Object Detection from Fewer Data (readpaper.com)

Proposal-Contrastive Pretraining for Object Detection from Fewer Data (iclr.cc)
